Video recording of the talk:

Slideshow:

In human communication, explanations serve to increase understanding, overcome communication barriers, and build trust. They are, in most cases, dialogues. In computer science, AI explanations (“XAI”) map how an AI system expresses the underlying logic, algorithmic processing, and data sources that make up its outputs. That is one-way communication.

How do we craft designs that "explain" concepts and respond to users’ intent? Can AI identify, elicit, and apply relevant user contexts to help us understand AI outputs? How do explanations become two-way?

We must create experiences with systems that will be required to respect user needs, dynamically explain their logic, and seek understanding. This is a significant challenge that, at its heart, needs UX leadership. The safety, trust, and understandability of the systems we design hinge on the way we craft models for explanation.

Related resource

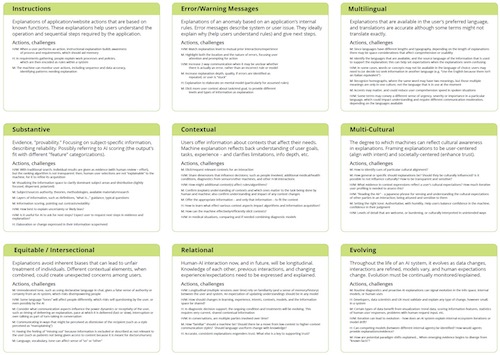

Types of Explanations

A poster (PDF) presented by Duane Degler at the 2023 Information Architecture Conference (updated 4.2024).

UXPA conference

This talk was presented at the 2024 edition of the annual conference of the User Experience Professionals Association (UXPA International).